Committee views deepfake demo; members warn about misinformation risk and urge attention to regulation

Summary

A staged deepfake demonstration prompted committee members to flag how quickly artificial intelligence can fabricate speech and video; members discussed federal regulatory gaps and the need for state-level attention to misinformation and platform moderation.

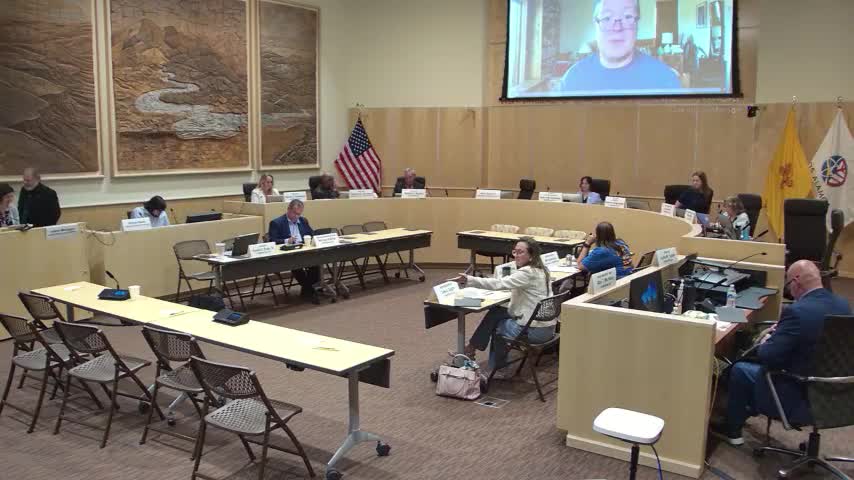

The Science and Technology Committee viewed a short deepfake demonstration that committee members and presenters said illustrated how easily AI can synthesize a person’s voice and image. The recording, presented as a demonstration, prompted immediate questions from committee members about visual and audio telltales (for example, lack of blinking or lip‑sync mismatches) and raised concerns about the spread of manipulated video and audio on social media platforms.

Why it matters: committee members noted that deepfakes can put words in the mouths of public figures or be used in advertising and political messaging. One committee member referenced federal oversight gaps and said the Federal Communications Commission and other federal authorities have an unclear role in regulating some platform activity.

What the committee discussed: members urged more public awareness about identifying manipulations, recommended training to spot visual and audio anomalies, and suggested the committee consider follow‑up work on whether state policy or guidance is needed. No formal action or legislation was proposed during the hearing.

Attribution and limits: the demonstration and several follow‑up comments were presented live to the committee; speakers included the demonstrator who introduced the clip. Several comments in the record described how the video’s visual cues (for example, lip‑sync issues and lack of blinking) revealed manipulation. Those observations were made on the record but were not always attributed to named committee members in the transcript, so this report leaves them unattributed.

Next steps: committee members asked staff to consider whether the body should take a later procedural step, such as a staff report or a future hearing, to examine the intersection of AI synthesis tools, platform policies and state responsibilities for consumer protection and election integrity.